Here Is Why Nvidia Still Has More Upside

When I wrote my first article on Nvidia Corporation (NASDAQ:NVDA) more than a year ago, the stock had a hold rating among Seeking Alpha analysts, with valuation being the major driver of that rating. After a gain of more than 200%, the stock is still a hold among analysts, with 11 rating it at least a buy and 24 rating it a hold or less as of the time of writing. Again, the bear case seems to revolve around the lofty growth prospects for the company.

While the tripling of the stock in just over a year should mean the hold rating is more accurate now than it was then, this article will discuss why Nvidia might still have more upside.

Recap of How Nvidia (Briefly) Became the Most Valuable Company in the World

In my first article on Nvidia, written more than a year ago, I focused on remarks by Nvidia CEO Jensen Huang that existing data centers were not ready for AI, and that this would spur capex spending of $250 billion a year (and growing) for multiple years on products and services for which Nvidia was essentially the only game in town. From the article:

So not only should Nvidia be a $1 trillion market cap, but it should also topple Apple as the world’s most valuable company if what Huang said turns out to be true. The question becomes, why is Nvidia trading at just below $1 trillion, rather than at almost $3 trillion like Apple?

Well, now that Nvidia did reach that $3 trillion valuation, it’s natural that questions arise about the sustainability of that valuation. In the risks section of that article, I mentioned that Nvidia might lack the iOS factor that Apple had and that kept its consumers locked in. This article revisits that risk.

Nvidia’s financial metrics reveal a price-to-earnings ratio (P/E) of 70.85 and a forward P/E of 44.67, indicating high market expectations for its future earnings. This elevated P/E ratio reflects strong investor confidence in Nvidia’s future performance, especially in the AI and data center markets. However, a high P/E also implies significant downside risk if growth expectations are not met.

Thus, despite Nvidia’s high valuation, investors are willing to pay a premium, hoping the company will maintain its industry-leading position. Additionally, Nvidia’s PEG ratio stands at 1.50. The PEG ratio, which divides the P/E ratio by the company’s expected earnings growth rate, is used to gauge a stock’s value relative to its growth. A PEG ratio below 1 typically indicates undervaluation, while a ratio above 1 suggests overvaluation. At 1.50, Nvidia is not cheap on this measure, but the ratio is far less stretched than the P/E alone, implying that the company’s expected robust earnings growth goes a long way toward justifying the price.
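To make the PEG arithmetic concrete, here is a minimal Python sketch. The function names are illustrative, and the text does not say whether the 1.50 figure was computed from the trailing or the forward P/E, so the implied growth rates below are assumptions that simply back out what each P/E would require.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """PEG = P/E divided by the expected annual earnings growth rate (in percent)."""
    return pe / growth_pct


def implied_growth_pct(pe: float, peg: float) -> float:
    """Growth rate (in percent) that a given P/E and PEG jointly imply."""
    return pe / peg


# Figures quoted in the text above.
trailing_pe = 70.85
forward_pe = 44.67
peg = 1.50

# Assumption: we do not know which P/E the quoted PEG is based on, so show both.
growth_if_trailing = implied_growth_pct(trailing_pe, peg)  # about 47% a year
growth_if_forward = implied_growth_pct(forward_pe, peg)    # about 30% a year
```

Either way, a PEG of 1.50 only holds up if earnings keep growing at a rate in the tens of percent per year, which is the growth expectation the paragraph refers to.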

Analyst Beth Kindig is even more optimistic, predicting Nvidia could reach a market capitalization of $10 trillion by 2030, driven by its next-generation Blackwell GPU and CUDA software platform. She anticipates that Nvidia will dominate the AI data center market in the coming years and expand its influence in emerging sectors such as automotive. This expert forecast further enhances market expectations for Nvidia’s future stock price.

In conclusion, despite Nvidia’s current high valuation, its leadership in AI, data centers, and emerging markets, coupled with strong financial performance and growth prospects, suggests its stock price still has room to rise. Investors evaluating Nvidia should focus on its continued performance and market share expansion in these critical areas. The high P/E and PEG ratios indicate strong market expectations for future growth, while impressive profit margins and financial health provide solid support for these expectations.

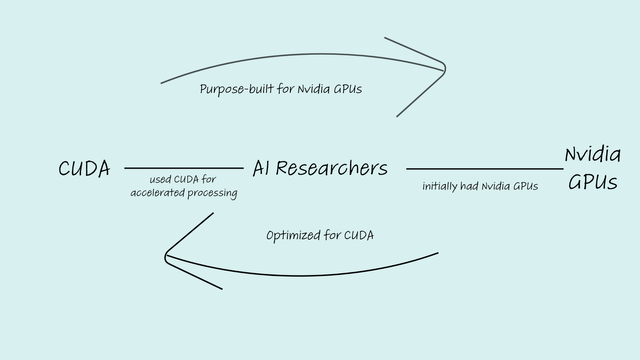

Why CUDA Could Be Nvidia’s iOS

In 2007, Nvidia developed Compute Unified Device Architecture (CUDA for short) to help use its GPUs in parallel computing for non-graphics workloads. To simplify this in extremely basic terms, the main way computing is done is by solving problems one at a time (sequential computing). Nvidia didn’t just design GPUs; it built the architecture necessary to solve really large computing problems by breaking them down into smaller problems and solving them all simultaneously (or in parallel). CUDA, again in very basic terms, is the piece of software that lets developers tell machines that they want to use the hardware for parallel computing (which is necessary for AI workloads).
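For readers who want to see the idea of "breaking a large problem into smaller ones" in code, here is a toy Python sketch. It is not CUDA and does not run on a GPU: it uses CPU threads from the standard library purely to illustrate the decomposition, and the function names are made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_squares(chunk):
    """The 'small problem': sum of squares over one slice of the data."""
    return sum(x * x for x in chunk)


def sequential(data):
    """Sequential computing: walk the whole data set one element at a time."""
    return sum_squares(data)


def chunked(data, n_chunks=4):
    """Parallel-style computing: split the data, solve each piece, combine."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partials = list(pool.map(sum_squares, chunks))
    return sum(partials)


data = list(range(1_000))
same_answer = sequential(data) == chunked(data)
```

Both routes give the same answer; the difference is that the chunked version hands out independent pieces of work. A GPU does this across thousands of cores at once, and CUDA is the layer developers use to express that kind of split on Nvidia hardware.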

That piece of software was built with Nvidia’s GPUs in mind and vice versa. And because Nvidia was really early, CUDA became the go-to programming platform for training AI models. As a result, those who preferred working with CUDA had to use NVIDIA GPUs, and those who were using the company’s GPUs were better off using CUDA, creating a virtuous cycle for Nvidia.

In my opinion, this is a crucial part of Nvidia’s bull case and where the similarities are with Apple’s iPhone. For years, Apple traded at 10x earnings on the premise that this is just a hardware company and eventually someone will make a more compelling or cheaper piece of hardware and win all the market share. That threat never materialized precisely because of iOS. Similarly, in Nvidia’s case, it is no longer about making a better chip, or a cheaper one for that matter; competitors must also solve the software part of the equation. This is arguably the more difficult part of competing with Nvidia, as the longer this takes, the more entrenched Nvidia will become.

Nvidia’s virtuous cycle (created by author)

If you think about Apple’s business, it’s a two-headed dragon. You buy an iPhone, a quality piece of hardware, then Apple upsells you with the services enabled by iOS and its software. Running on iOS also means that consumers who buy an iPhone are unlikely to switch and will buy other Apple hardware products, creating consumer lock-in. Similarly, with Nvidia, customers buy the GPUs today because they are perceived to be the best on the market. But there is also a software component required to activate the capabilities of those GPUs. It is Nvidia’s leadership in CUDA that maintains its competitive advantage. Like with Apple, when it’s time for customers to upgrade their GPUs, they will stick with Nvidia because CUDA only works with Nvidia. And if they want AMD, they will have to learn its computing software and migrate the training models they created, which creates a significant switching cost similar to that faced by Apple consumers.

Nvidia’s Data Center Opportunity is Enormous

One simple way to understand just how large Nvidia’s opportunity could be is to look at AI startup CoreWeave. The company jumped to a $19 billion valuation just a few weeks ago, thanks to being one of the main providers of Nvidia GPUs. Here is an interesting bit from the company’s blog:

With over 45,000 GPUs in our fleet, we are the largest private operator of GPUs in North America. Our GPU infrastructure is delivered from 5 data centers in Chicago, North Carolina, New Jersey and New York, and rivals the assets operated by the large cloud computing companies.

This works out to about 9,000 GPUs for the type of data center required to run AI workloads. The US International Trade Commission estimated that there were roughly 8,000 data centers worldwide in 2021. Now if you believe AI is the new internet, those 8,000 data centers are going to be refitted for AI workloads (revisit the recap on Nvidia’s initial bull thesis). That means 72 million GPUs, and that’s without any growth in the number of data centers, something that looks destined to happen. At a cost of at least $30,000 per GPU, that is approximately $2.2 trillion in GPU sales. Sure, as GPUs keep improving, fewer of them will be needed. But that will be more than offset by the growth in data centers and the rise in unit prices of GPUs.

CNBC recently reported that Nvidia holds an 80% share of AI chips. So that is potentially $1.8 trillion in sales going to Nvidia with sky-high margins. And that’s just data center revenue, so excluding gaming, for example, and other verticals. So even if you assume that capex cycle takes 10 years (and it will likely take less), Nvidia’s average sales per quarter ($44 billion in this case) would be almost double what it generated for the whole of 2023.
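The back-of-the-envelope sizing above can be reproduced in a few lines of Python. This is only a sketch of the arithmetic using the inputs quoted in the text; the variable names are mine, and the unrounded results differ slightly from the rounded figures in the prose ($2.2 trillion, $1.8 trillion, $44 billion).

```python
# Inputs quoted in the text.
coreweave_gpus = 45_000          # GPUs in CoreWeave's fleet
coreweave_data_centers = 5       # data centers hosting that fleet
worldwide_data_centers = 8_000   # USITC estimate for 2021
price_per_gpu_usd = 30_000       # "at least" figure used in the text
nvidia_share = 0.80              # AI chip share reported by CNBC
capex_cycle_years = 10           # conservative assumption from the text

# GPUs needed if every existing data center were fitted out like CoreWeave's.
gpus_per_data_center = coreweave_gpus // coreweave_data_centers   # 9,000
total_gpus = gpus_per_data_center * worldwide_data_centers        # 72 million

# Dollar value of those GPUs, and the slice going to Nvidia.
total_gpu_sales_usd = total_gpus * price_per_gpu_usd              # $2.16 trillion
nvidia_sales_usd = total_gpu_sales_usd * nvidia_share             # about $1.73 trillion

# Spread evenly over the assumed capex cycle.
nvidia_sales_per_year_usd = nvidia_sales_usd / capex_cycle_years  # about $173 billion
nvidia_sales_per_quarter_usd = nvidia_sales_per_year_usd / 4      # about $43 billion
```

The small gap between these numbers and the ones in the prose comes from rounding at each step: the prose rounds $2.16 trillion up to $2.2 trillion before applying the 80% share, which is how it arrives at $176 billion a year and $44 billion a quarter.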

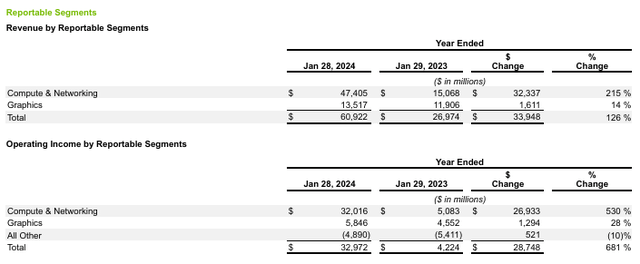

Nvidia’s financials will look vastly different in just a few years (company filings)

Let’s focus on the Compute & Networking segment, given it’s the relevant one for my thesis. As discussed in the paragraph above, even if the AI transition takes a decade to optimize the data centers present today, and assuming there is no growth in the number of data centers worldwide, Nvidia would generate average annual revenue of $176 billion over the next 10 years, excluding graphics. And because of CUDA, Nvidia can actually lock in customers for now.

The faster the transition to AI data centers, the more Nvidia sells given its dominant competitive position, which entrenches CUDA’s position as the platform for parallel processing, and in turn leads to more GPU sales in the future. There is also the DGX cloud business, which offers an AI-training-as-a-service platform and customizable AI models for the enterprise, and which can help cement Nvidia’s competitive position even more. As a result, it is highly likely the company can maintain its current margins of 67% and generate operating income of $118 billion a year on average over the next 10 years just from compute & networking.

Let’s assume its net interest income is $0 instead of the current $820 million. Slap the current corporate tax rate on those earnings and Nvidia’s net income would be $93 billion. That means Nvidia is selling at 31x forward earnings, assuming:

- Transition to AI takes 10 years

- There is no growth in the number of data centers worldwide.

- Gaming and other high-growth avenues like automotive make no money for 10 years.

Given how unlikely those 3 factors are, I feel that the valuation has a lot of margin of safety, which supports my reiterating the buy rating.
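The earnings math behind that multiple can be sketched in Python as follows. The 21% rate is my reading of "the current corporate tax rate" (the US federal statutory rate), and the market capitalization is not an input from the text: it is backed out from the 31x multiple, so treat both as assumptions.

```python
def net_income_bn(revenue_bn, operating_margin, tax_rate, net_interest_bn=0.0):
    """Net income in $ billions from revenue, operating margin and a flat tax rate."""
    operating_income = revenue_bn * operating_margin
    pretax_income = operating_income + net_interest_bn
    return pretax_income * (1 - tax_rate)


avg_annual_revenue_bn = 176   # compute & networking only, from the text
operating_margin = 0.67       # current margin quoted in the text
tax_rate = 0.21               # assumption: US federal statutory rate

operating_income_bn = avg_annual_revenue_bn * operating_margin   # about $118 billion
income_bn = net_income_bn(avg_annual_revenue_bn, operating_margin, tax_rate)  # about $93 billion

# Assumption: the market cap is implied by the 31x multiple, not quoted directly.
forward_multiple = 31
implied_market_cap_bn = forward_multiple * income_bn              # about $2.9 trillion
```

The implied market cap of roughly $2.9 trillion is consistent with the approximately $3 trillion valuation mentioned earlier in the article.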

Competition is Coming

There are signs of competition coming for Nvidia’s dominant position. The first one is probably Apple Intelligence. There are two main reasons why Apple Intelligence is a threat to Nvidia. The first is that Apple Inc. (AAPL) will use its own chips, meaning Nvidia won’t make its way to Apple devices even if AI is the new internet. The second issue is that Apple will do some if not most of the AI workloads on the device. This implies that the use of Nvidia GPUs could be less than forecast if companies embrace what is in essence a type of edge AI, given Nvidia’s GPUs will mostly be in data centers.

Another issue to be wary of is the concentration of sales. There is one mystery customer that makes up a significant share of Nvidia’s sales:

Sales to one customer, Customer A, represented 13% of total revenue for fiscal year 2024, which was attributable to the Compute & Networking segment. One indirect customer which primarily purchases our products through system integrators and distributors, including through Customer A, is estimated to have represented approximately 19% of total revenue for fiscal year 2024, attributable to the Compute & Networking segment. Our estimated Compute & Networking demand is expected to remain concentrated. There were no customers with 10% or more of total revenue for fiscal years 2023 and 2022.

In a way, this is an important indication of the validity of the thesis that AI is the new internet. If the thesis is valid, sales concentration should decline over time as everyone embraces AI. If it is not, then the concentration should persist and Nvidia will be at the whims of this customer. Investors must pay attention to this metric.

The growth in Advanced Micro Devices, Inc. (AMD) and Intel Corporation (INTC) GPUs is also a threat, given they use their own competing programming platforms, which would undermine CUDA in the long term if they take off. OpenAI, for example, launched its own CUDA alternative. Meta, meanwhile, launched software that enhanced interoperability between Nvidia and AMD GPUs, a move designed to undermine CUDA. From Reuters:

Software has become a key battleground for chipmakers seeking to build up an ecosystem of developers to use their chips. Nvidia’s CUDA platform has been the most popular so far for artificial intelligence work.

However, once developers tailor their code for Nvidia chips, it is difficult to run it on graphics processing units, or GPUs, from Nvidia competitors like AMD. Meta said the software is designed to easily swap between chips without being locked in.

These efforts have yet to change the competitive dynamics in my opinion, but it is the key battle investors need to keep their eye on as they assess Nvidia.

Conclusion: Nvidia is Still Attractive

Despite the stock’s impressive run-up over the past 12 months or so, Nvidia still has room to run thanks to the huge opportunity in GPU sales for the data center. Nvidia’s CUDA also provides high conviction that the company can continue to maintain its 80% share in GPUs. The biggest risk, however, is that big tech players are trying their hardest to dislodge Nvidia’s leadership in the computing platform part of the supply chain. Having said that, this risk has yet to materialize, and so the stock remains a buy, especially on any dips. The market is not a zero-sum game by any means, however, and these attempts to dislodge CUDA could help AMD and Intel as well.