
Nvidia: Upside Potential Is Greater Than You May Think

Nvidia Corporation (NASDAQ:NVDA) stock has roughly quadrupled since spring 2023, when this remarkable AI-driven rally began in earnest. The rally has also attracted many skeptics who doubt the stock's ability to keep climbing from here.

The truth is that Nvidia is much more than a semiconductor company selling popular AI chips; it has built an extensive AI platform. Once investors understand how far Nvidia has gone to capitalize on this AI revolution, the stock's greater upside potential becomes clearer.

The market seems too fixated on the future growth of AI chip sales. However, Nvidia's growth story extends well beyond hardware, as there is a massive opportunity for Nvidia in the emerging AI software industry, which we will cover in this article. Nvidia stock remains a "buy."

Nvidia’s massive opportunity in the software industry

To appreciate the upside potential from here, it is crucial to understand holistically how Nvidia has positioned itself for an AI revolution that it anticipated before most of the world did. To see how Nvidia plans to capitalize on this new era, we have to go back to the comprehensive explanation CEO Jensen Huang provided on the company's fiscal Q3 2024 earnings call in November 2023. The excerpts are long and the terminology may seem complex, so we will break down what he is conveying in simplified terms for all investors.

There is a glaring opportunity in the world for AI foundry, and it makes so much sense. First, every company has its core intelligence. It makes up our company. Our data, our domain expertise, in the case of many companies, we create tools, and most of the software companies in the world are tool platforms, and those tools are used by people today. And in the future, it’s going to be used by people augmented with a whole bunch of AIs that we hire.

And then second, you have to have the best practice known practice, the skills of processing data through the invention of AI models to create AIs that are guardrails, fine-tuned, so on and so forth, that are safe, so on and so forth.

And the third thing is you need factories. And that’s what DGX Cloud is. Our AI models are called AI Foundations. Our process, if you will, our CAD system for creating AIs are called NeMo and they run on NVIDIA’s factories we call DGX Cloud.

Our monetization model is that with each one of our partners they rent a sandbox on DGX Cloud, where we work together, they bring their data, they bring their domain expertise, we bring our researchers and engineers, we help them build their custom AI. We help them make that custom AI incredible.

That's a lot to process, so let's break it down. In November 2023, Nvidia introduced its first AI foundry in partnership with Microsoft Azure. The AI foundry combines Nvidia's "generative AI model technologies, LLM training expertise and giant-scale AI factory [DGX Cloud]". Essentially, Nvidia is offering its own generative AI capabilities to enterprises through the platforms of cloud service providers (CSPs), to help companies build their own AI models.

Indeed, Nvidia's technology has become highly sought-after in the corporate world amid the AI revolution, making cloud providers willing to offer Nvidia's unique expertise and tools in addition to its GPUs, in a bid to attract more enterprises to their cloud platforms.

Nvidia is working hard to sustain its presence on the cloud platforms, and its cloud endeavors are likely to keep growing from here, whether it continues to focus on the partnership route or increasingly serves enterprises directly over time.

This already seems to be playing out: Nvidia is not only partnering with CSPs to offer DGX Cloud, but is now also partnering with leading server OEMs like Dell to build what it calls "AI factories."

Interestingly, on their earnings calls, the CEOs of the leading cloud providers like to proclaim how large the total addressable market for their cloud businesses remains, highlighting how many businesses' workloads are still "on-premises."

The cloud providers are essentially betting that more and more enterprises will have to migrate their workloads to the cloud to fully leverage the power of generative AI.

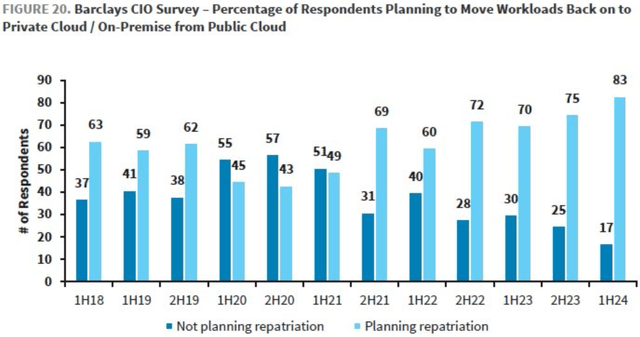

But the reality is that many businesses remain averse to shifting to the cloud, primarily due to data security and privacy concerns. Even those that do move certain workloads to the cloud often do so to experiment with generative AI services, and then repatriate those workloads back on-premises.

This insight is corroborated by a Barclays survey earlier this year, which revealed that 83% of CIOs planned to repatriate at least some workloads to either private cloud or on-premises in the first half of 2024.

And this is the trend that Nvidia is now seeking to capitalize on.

Basically, Nvidia is helping enterprises build AI applications through DGX Cloud and AI factories, taking advantage of the fact that these companies don't want to hand their data to other companies (the public cloud providers).

Through AI factories and DGX Cloud, Nvidia is also taking advantage of the scarcity of AI talent in this still-nascent generative AI revolution. Implementing and scaling generative AI requires the right technical talent, which many enterprises currently lack in-house.

Hence, companies have to rely on expertise from leading tech companies, including Nvidia, to set up their AI infrastructure and help them build AI models. This creates a significant opportunity for Nvidia to lock enterprises into its ecosystem, as each company's AI models and software applications will be optimized specifically for Nvidia's GPUs and hardware.

And that is just the training and production phase. Once enterprises' AI models and applications have been built, they run on Nvidia's GPUs using NVIDIA AI Enterprise software, which costs $4,500 per GPU per year.
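To get a feel for how a per-GPU licence adds up, here is a minimal sketch that multiplies the $4,500 per GPU per year price cited above by a fleet size. The fleet sizes, and the assumption that every GPU carries a full-price paid licence, are hypothetical; real deals may be bundled or discounted.

```python
# Annual NVIDIA AI Enterprise licence cost at the $4,500 per GPU per year
# price cited in the article. Fleet sizes are hypothetical illustrations.
PRICE_PER_GPU_PER_YEAR = 4_500  # USD


def annual_license_cost(num_gpus: int, price_per_gpu: int = PRICE_PER_GPU_PER_YEAR) -> int:
    """Yearly software cost, assuming every GPU carries a full-price licence."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return num_gpus * price_per_gpu


if __name__ == "__main__":
    for fleet in (1_000, 10_000, 100_000):
        print(f"{fleet:>7,} GPUs -> ${annual_license_cost(fleet):,} per year")
```

Under these assumptions, a hypothetical 10,000-GPU deployment would carry $45 million a year in software fees, recurring for as long as the GPUs stay in service.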

That being said, while the public cloud providers have been keen to offer Nvidia's technology through their platforms, they will certainly strive to keep software companies on their public cloud services, and increasingly encourage them to use their own in-house hardware and software solutions to run their applications.

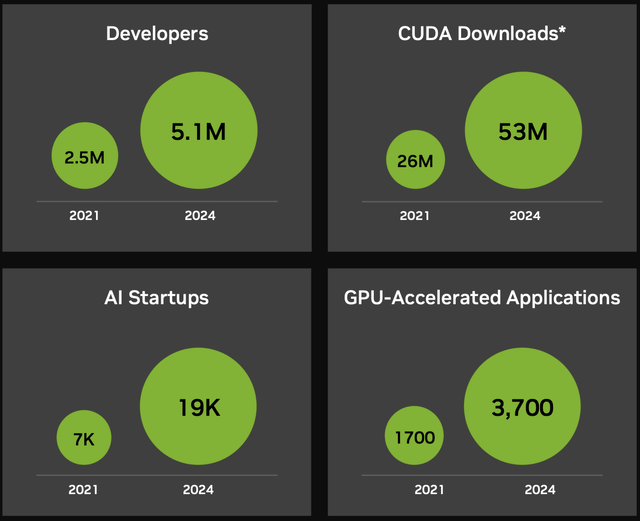

Nevertheless, Nvidia's GPUs are unlikely to fall out of favor anytime soon, given the extensive ecosystem built around its products, including 5.1 million developers, which helps sustain its moat.

Additionally, Nvidia will certainly strive to capitalize on individual enterprises' need for strong data security and privacy by offering them on-premises solutions and building AI factories for them.

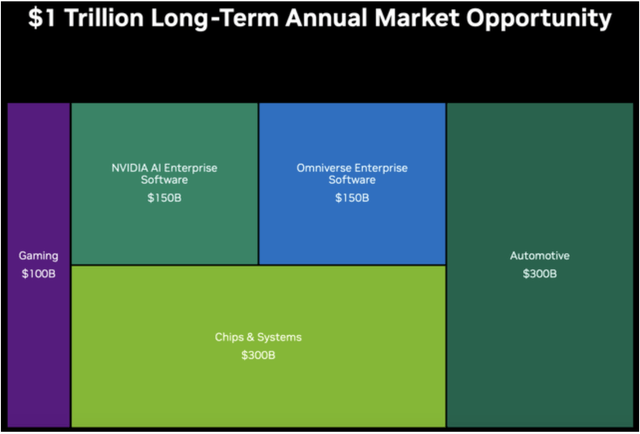

Last year, Nvidia said it expects the annual market opportunity for NVIDIA AI Enterprise to be worth $150 billion, as part of a larger $1 trillion market opportunity for the entire company.

How much of this total addressable market (TAM) Nvidia will capture remains to be seen. But the more GPUs Nvidia sells now, and the more AI factories it builds for enterprises around the world, the greater its future share of the AI software market is likely to be.
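Since the capture rate is the open question, a simple scenario table helps frame it. The sketch below multiplies the $150 billion annual opportunity Nvidia has cited by a few market-share values; the shares are hypothetical inputs for illustration, not forecasts.

```python
# Scenario sketch: annual NVIDIA AI Enterprise revenue if Nvidia captured a
# given share of the $150B annual opportunity it has cited.
# The share values used below are hypothetical, not forecasts.
SOFTWARE_TAM_BILLIONS = 150.0


def captured_revenue(share: float, tam: float = SOFTWARE_TAM_BILLIONS) -> float:
    """Revenue in billions of USD for a market share between 0 and 1."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return tam * share


if __name__ == "__main__":
    for share in (0.05, 0.10, 0.25):
        print(f"{share:.0%} share -> ${captured_revenue(share):.1f}B per year")
```

Even the smallest hypothetical share here works out to roughly $7.5 billion a year of largely recurring software revenue.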

Will Nvidia stock have a major pullback?

Because we are still very early in the phase of enterprises building out AI data centers, Nvidia's revenue and earnings are likely to remain high.

Once the cloud service providers, consumer internet companies and early enterprise customers are nearly done building out their AI infrastructure, their purchases of Nvidia's chips will inevitably slow from current levels. It is at this stage that a major pullback in the stock price could occur.

We are still in the early stages of this build-out, and Nvidia's sales and earnings growth rates are likely to remain high over the next five years, with forward long-term EPS growth (3-5 year CAGR) estimated at 32.70%.
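To put that growth estimate in perspective, the sketch below compounds a 32.70% annual rate over three and five years. It assumes, purely for illustration, that the rate holds constant in every year of the horizon; it is compounding arithmetic, not a forecast.

```python
# Cumulative EPS multiple implied by a constant compound annual growth rate.
# Assumes (hypothetically) that 32.70% growth holds in every year.
def growth_multiple(cagr: float, years: int) -> float:
    """Return the cumulative multiple after compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


if __name__ == "__main__":
    for years in (3, 5):
        print(f"{years} years at 32.70% -> EPS x{growth_multiple(0.327, years):.2f}")
```

At that pace, EPS would grow roughly 2.3x in three years and about 4.1x in five.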

But does that mean you should sell your NVDA shares as we approach the end of this estimated five-year deployment cycle?

The truth is, no one really knows how long this AI infrastructure build-out phase will last.

As generative AI revolutionizes more aspects of the digital economy, and new types of services arise that weren't possible before, more and more enterprises could decide to set up AI factories of their own over the next decade, keeping demand for Nvidia's hardware high without a major slowdown in revenue and profit growth.

Furthermore, even if hardware sales slow notably in a few years' time, Nvidia has a burgeoning software opportunity to capitalize on, as discussed earlier. Continued growth in NVIDIA AI Enterprise revenue could therefore cushion any drop-off in hardware revenue.

In fact, locking customers into the NVIDIA AI Enterprise software ecosystem should also benefit the company’s hardware business in the form of high-volume replacement cycles (i.e., data centers replacing the oldest generation chips with latest generation GPUs).

Nvidia has moved to a one-year release cadence, launching a new generation of GPUs every year. How frequently customers will replace old chips with new GPUs remains to be seen.

If customers can achieve the kind of return-on-investment figures that Jensen Huang shared on the last earnings call, they could indeed be inclined to keep buying new chips to stay competitive.

According to Nvidia's own long-term annual market opportunity projections, the company expects the "Chips & Systems" market to be worth $300 billion per year. Note that this includes not just AI GPUs, where Nvidia is estimated to hold "between 70% and 95% of the market," but also adjacent hardware such as networking equipment, including InfiniBand, Ethernet, switches and NICs. In a previous article, we discussed Nvidia's savvy strategy of increasingly selling its GPUs as part of pre-built HGX supercomputers, in which clusters of 4 or 8 AI chips are combined using InfiniBand and NVLink technology, together with the NVIDIA AI Enterprise software layer. This approach allows Nvidia to capture as much as possible of the $300 billion "Chips & Systems" market ahead (as well as the AI software market), fending off competition from networking equipment makers like Broadcom and Arista Networks, and of course chipmakers like AMD and Intel.

The point is, if Nvidia generated over $47 billion in data center revenue last fiscal year (including some software revenue), and the company expects the "Chips & Systems" market to grow to $300 billion per year (of which it is likely to hold a dominant share), then it still has room to multiply its revenue. Nvidia's executives clearly expect many more data centers to be built out, and if the market opportunity is expected to reach $300 billion per year, the chip and hardware upgrade cycle is also likely to involve high-volume orders every year.
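The headroom argument can be checked with simple arithmetic. The sketch below compares the roughly $47 billion of data center revenue with the cited $300 billion "Chips & Systems" market under a few market-share scenarios. The shares are hypothetical, and the comparison is loose because the $47 billion includes some software revenue while the $300 billion figure is a hardware market estimate.

```python
# Rough headroom arithmetic using the article's figures. The market-share
# scenarios are hypothetical; the $47B also includes some software revenue.
DATA_CENTER_REVENUE_B = 47.0
CHIPS_SYSTEMS_TAM_B = 300.0


def revenue_multiple(share: float) -> float:
    """How many times current data center revenue a given market share would be."""
    return CHIPS_SYSTEMS_TAM_B * share / DATA_CENTER_REVENUE_B


if __name__ == "__main__":
    print(f"Whole market vs. today: {revenue_multiple(1.0):.1f}x")
    for share in (0.50, 0.70, 0.80):
        print(f"{share:.0%} share -> ${CHIPS_SYSTEMS_TAM_B * share:.0f}B, "
              f"{revenue_multiple(share):.1f}x current revenue")
```

Under these assumptions, even a 50% share of the market would be more than three times last fiscal year's data center revenue.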

Risks to bull case for Nvidia

Nvidia’s largest customers are designing their own chips

A favorite argument among the bears is that Nvidia's biggest customers are designing their own chips, including the major cloud providers (Microsoft Azure, Amazon AWS and Google Cloud) as well as consumer internet giant Meta Platforms.

In fact, an enterprise survey conducted by UBS recently revealed that:

Nearly 70% of respondents indicated they were using some sort of Nvidia hardware as an LLM training platform. Another 21% said they were using AWS Trainium and 4% were using Intel’s (INTC) Gaudi.

Nvidia still holds a dominant lead in AI chips, but the fact that AWS has successfully encouraged a growing number of its cloud customers to use its in-house chips for training and inference is certainly noteworthy.

However, this bearish argument against buying NVDA stock is becoming weaker for several reasons.

Firstly, as CFO Colette Kress highlighted on the last earnings call (emphasis added):

Strong sequential data center growth was driven by all customer types, led by enterprise and consumer internet companies. Large cloud providers continue to drive strong growth as they deploy and ramp NVIDIA AI infrastructure at scale and represented the mid-40s as a percentage of our Data Center revenue.

While CSPs still make up almost half of Nvidia's Data Center sales, concentration risk is easing as demand for Nvidia's GPUs broadens to other customer segments.

That being said, the CFO also went on to highlight that the main sources of "enterprise" and "consumer internet" revenue were Tesla (TSLA) and Meta Platforms (META), respectively:

Enterprises drove strong sequential growth in Data Center this quarter. We supported Tesla’s expansion of their training AI cluster to 35,000 H100 GPUs.

…

Consumer Internet companies are also a strong growth vertical. A big highlight this quarter was Meta’s announcement of Llama 3, their latest large language model, which was trained on a cluster of 24,000 H100 GPUs.

Nonetheless, the customer base for Nvidia's GPUs should continue to diversify and broaden. As discussed in depth earlier, enterprises' data privacy and security needs could impede their shift to cloud platforms and encourage them to stay on-premises instead. This should create immense sales growth opportunities for Nvidia in the enterprise space, as companies decide to build AI factories instead.

So while the bears argue that Nvidia's future sales growth could slow sharply because CSPs are designing their own chips, the opposite may actually be the case: cloud providers' future sales growth could be undermined by Nvidia building on-premises AI factories for more and more enterprises, reducing their need to migrate to the cloud to take advantage of generative AI.

Speculative behavior could exacerbate stock volatility

A growing number of market participants, particularly retail investors, are seeking leveraged exposure to NVDA through leveraged ETFs. These include the Direxion Daily Semiconductor Bull 3X Shares ETF (SOXL), a 3x semiconductor-sector fund, and the GraniteShares 2x Long NVDA Daily ETF (NVDL), whose assets under management have grown to $12 billion and $5 billion, respectively. While such vehicles can deliver lucrative returns on the upside, they also multiply the losses from any short-term pullback in NVDA stock.

As a result, weak hands simply riding the AI hype for quick returns are likely to be the first to sell out of the leveraged ETFs and NVDA stock at the slightest hint that the bull run may be ending. This could exacerbate pullbacks in the stock, but also create new buying opportunities for long-term investors who understand the growth opportunities ahead.
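Here is a minimal sketch of how a daily-reset leveraged fund amplifies moves. The price path is hypothetical, and fees, financing costs and tracking error are ignored; the point is only that a 2x fund applies its leverage to each day's return, so losses compound faster than the stock's.

```python
# Hypothetical illustration of a daily-reset leveraged ETF.
# Price moves are made up; fees, financing costs and tracking error are ignored.
def compound(daily_returns, leverage=1.0):
    """Grow $1 through a sequence of daily returns, applying `leverage` each day."""
    value = 1.0
    for r in daily_returns:
        value *= 1.0 + leverage * r
    return value


if __name__ == "__main__":
    moves = [0.10, -0.10]  # up 10% one day, down 10% the next (hypothetical)
    print(f"Stock:  {compound(moves):.4f}")        # 1.1 * 0.9 = 0.99
    print(f"2x ETF: {compound(moves, 2.0):.4f}")   # 1.2 * 0.8 = 0.96
    print(f"2x ETF after one -20% day: {compound([-0.20], 2.0):.4f}")  # 0.60
```

In this made-up path the stock ends 1% lower while the 2x fund ends 4% lower, and a single 20% drop in the stock would cut the 2x fund by 40%.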

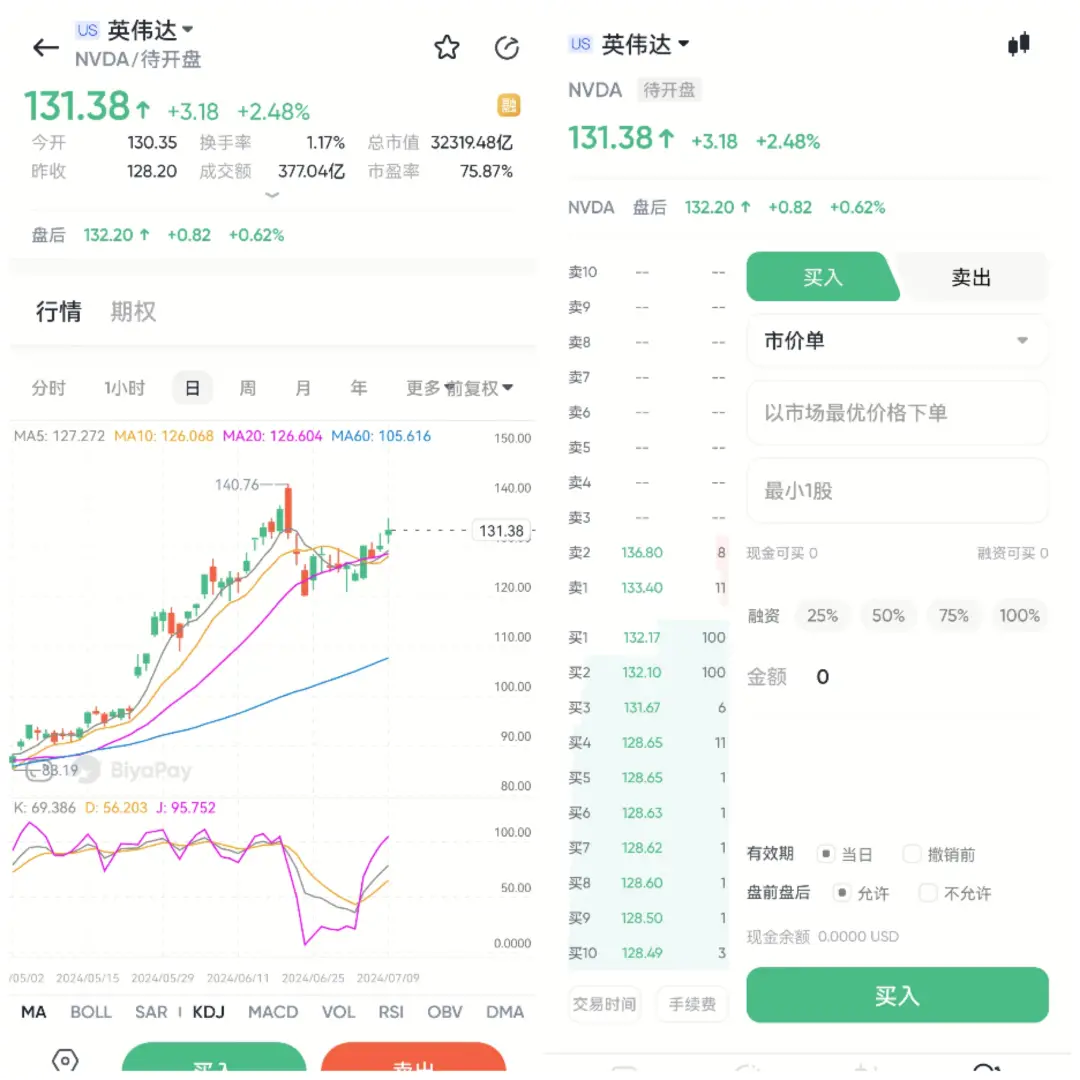

Given these possible buying opportunities in the future, you may want to go to BiyaPay, search for stock codes on the platform, monitor market trends, and wait for the right time to get on board. If you have difficulty with deposits and withdrawals, you can also use the platform as a tool for depositing and withdrawing funds for US and Hong Kong stocks: top up with digital currency, exchange it for US dollars or Hong Kong dollars, withdraw it to your bank account, and then deposit it with other securities firms to buy stocks. Funds arrive quickly, there is no limit, and you will not miss market moves.

Nvidia’s financial performance and valuation

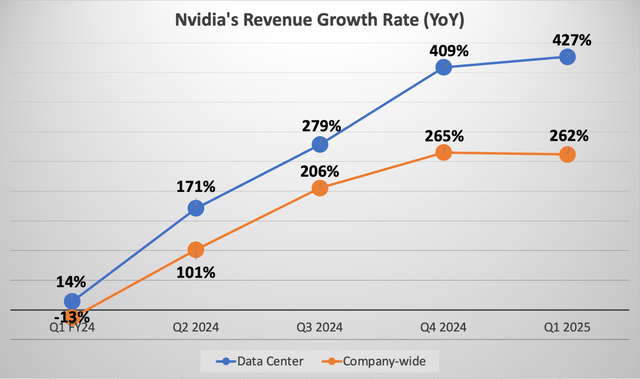

Nvidia has delivered outstanding growth in its data center business consistently over the past year, with data center revenue up another 427% year over year last quarter. There are no signs of this slowing down, as demand broadens beyond CSPs.

Furthermore, over the past several quarters, skeptics have argued that competitors like AMD and Intel could catch up to Nvidia and undermine its growth rates and pricing power. However, no competitor has been able to introduce technology that matches the performance of Nvidia's GPUs and AI platform, allowing Nvidia to maintain immensely strong pricing power for its next-generation chips.

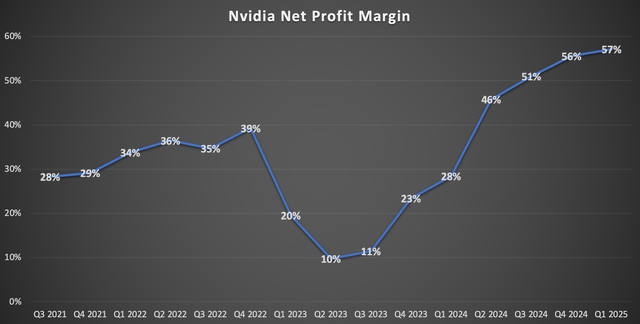

Consequently, strong top-line growth continues to flow down to the bottom line, with diluted EPS up 803% over the past year and a net profit margin of 57%.

Nvidia also remains well positioned to sustain strong pricing power with its next-generation Blackwell platform, which will start shipping this year, with analysts at Jefferies noting that "industry checks suggest further upside, with beats accelerating in FY26 as Blackwell ramps."

With Nvidia estimated to raise the price of its new-generation GPUs by around 40% over the previous generation, complemented by high-margin software revenue growth through NVIDIA AI Enterprise (as discussed earlier), the company's top- and bottom-line growth is unlikely to slow down any time soon.

This is clearly shaping up to be a long-term growth story, as Nvidia is well positioned to dominate the AI era beyond just GPUs. Surprisingly, though, Nvidia's valuation remains relatively reasonable at less than 47x forward earnings, roughly in line with its 5-year average.

Market participants like to compare Nvidia's performance to that of Cisco Systems during the dot-com era. However, with NVDA still reasonably valued at 47x forward earnings, current buyers have little reason to hesitate. For context, Cisco's valuation peaked at 196x forward earnings before the dot-com bubble burst.

Moreover, when we divide the forward P/E ratio by the expected EPS growth rate to obtain the forward price/earnings-to-growth (PEG) ratio, the stock looks attractively cheap at 1.42, well below its 5-year average forward PEG of 2.13.

As discussed earlier, recurring software revenue should make up a growing proportion of Nvidia's revenue going forward, which should also support hardware upgrade cycles. As the market comes to see Nvidia as a secular growth stock rather than a cyclical one, NVDA should be able to sustain a forward PEG ratio above 2 over the long term.

Given the company's growth potential, Nvidia should be trading closer to its 5-year average forward PEG of 2.13. That would imply 50% upside from here, meaning the stock should be worth at least $190 at present.

Keep in mind, though, that while Nvidia's valuation is reasonable today, if the stock climbs to an unsustainably high multiple due to extremely speculative behavior (e.g., through the leveraged ETFs mentioned in the Risks section), it may no longer be a good investment, even if the anticipated AI growth story plays out over the next decade.
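The forward PEG calculation itself is a single division: forward P/E over the expected EPS growth rate expressed in percent. The sketch below uses the article's inputs (about 47x forward earnings and 32.70% growth). At exactly 47x it gives about 1.44; the article's 1.42 corresponds to a P/E slightly below 47x (roughly 46.4x), consistent with "less than 47x".

```python
# Forward PEG = forward P/E / expected annual EPS growth rate (in percent).
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Return the forward PEG ratio; undefined for zero or negative growth."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return forward_pe / eps_growth_pct


if __name__ == "__main__":
    # Inputs from the article: just under 47x forward earnings, 32.70% growth.
    print(f"Forward PEG at 47x:   {forward_peg(47.0, 32.7):.2f}")  # 1.44
    print(f"Forward PEG at 46.4x: {forward_peg(46.4, 32.7):.2f}")  # 1.42
```

A PEG near 1 is commonly read as a valuation roughly matched to growth, which is why a figure well below the stock's own 2.13 average is framed as cheap.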
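The 50% figure follows from re-rating arithmetic: if earnings and expected growth stay fixed, the share price scales with the PEG multiple, so moving from 1.42 to 2.13 is a 1.5x change. The sketch below reproduces that and, as an illustrative check, works backwards from the $190 target to the implied starting price.

```python
# Re-rating arithmetic behind the "50% upside" claim. Assumes earnings and
# growth expectations are unchanged, so price scales with the PEG multiple.
CURRENT_PEG = 1.42
TARGET_PEG = 2.13  # Nvidia's 5-year average forward PEG, per the article


def implied_upside(current_peg: float, target_peg: float) -> float:
    """Fractional price change if the PEG multiple moves from current to target."""
    return target_peg / current_peg - 1.0


if __name__ == "__main__":
    upside = implied_upside(CURRENT_PEG, TARGET_PEG)
    print(f"Implied upside: {upside:.0%}")  # 50%
    # Working backwards from the article's $190 target to the starting price.
    print(f"Implied starting price: ${190 / (1 + upside):.2f}")
```

By this arithmetic, the $190 target corresponds to a starting share price of roughly $127.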

Summary

For now, NVDA's valuation remains attractive relative to its growth prospects and its huge opportunities in the AI industry. Even with the risks associated with the bull run, the stock still has room to rise, making it worth buying.